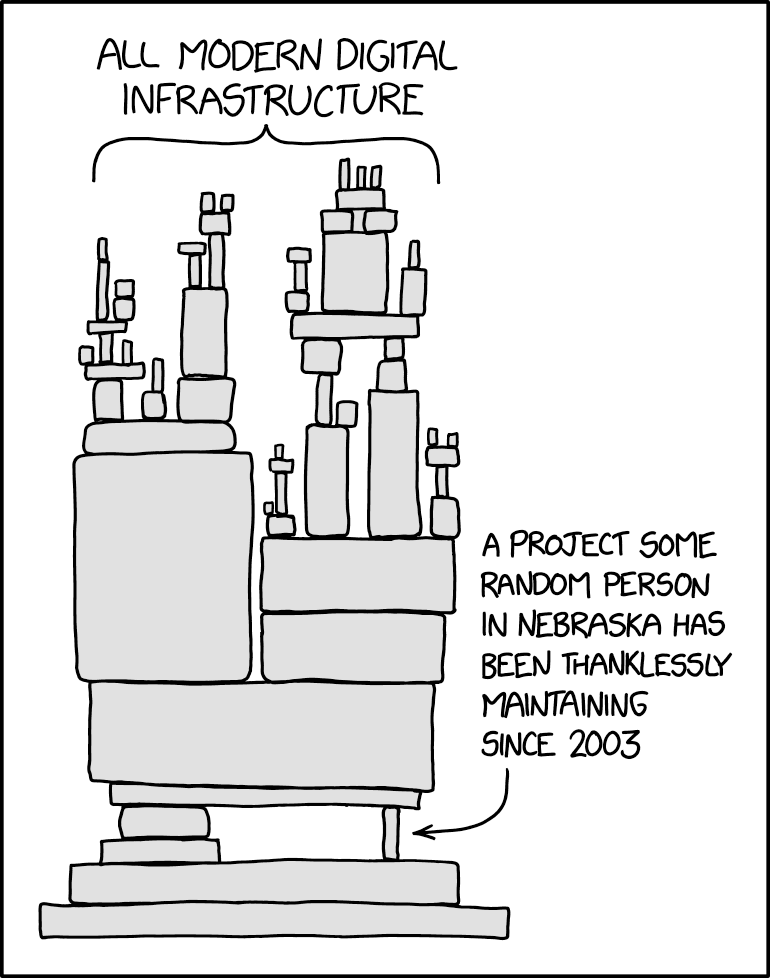

This picture shows three meteorological shelters next to each other in Murcia (Spain). The rightmost shelter is a replica of the Montsouris (French) screen, which was in use in Spain and many other European countries in the late 19th and early 20th century. On the left is a Stevenson screen equipped with conventional meteorological instruments, a set-up used globally for most of the 20th century. In the middle is a Stevenson screen equipped with automatic sensors. The Montsouris screen is better ventilated, but because some solar radiation can reach the thermometer, it registers somewhat higher temperatures than a Stevenson screen. Picture: Project SCREEN, Center for Climate Change, Universitat Rovira i Virgili, Spain.

The instrumental climate record is human cultural heritage, the product of the diligent work of many generations of people all over the world. But changes in the way temperature was measured and in the surroundings of weather stations can produce spurious trends. An international team, with participation of the Universitat Rovira i Virgili (Spain), the State Meteorological Agency (AEMET, Spain) and the University of Bonn (Germany), has made a major effort to provide reliable tests for the methods used to computationally eliminate such spurious trends. These so-called “homogenization methods” are a key step in turning the enormous effort of the observers into accurate climate change data products. The results have been published in the prestigious Journal of Climate of the American Meteorological Society. The research was funded by the Spanish Ministry of Economy and Competitiveness.

Climate observations often go back more than a century, to times before we had electricity or cars. Such long time spans make it virtually impossible to keep the measurement conditions the same across time. The best-known problem is the growth of cities around urban weather stations. Cities tend to be warmer, for example due to reduced evaporation by plants or because high buildings block cooling. This can be seen by comparing urban stations with surrounding rural stations. It is less talked about, but there are similar problems due to the spread of irrigation.

The most common reason for jumps in the observed data is the relocation of weather stations. Volunteer observers tend to make observations near their homes; when they retire and a new volunteer takes over the tasks, this can produce temperature jumps. Even for professional observations, keeping the locations the same over centuries can be a challenge, either due to urban growth making sites unsuitable or due to organizational changes leading to new premises. Dr. Victor Venema, a climatologist from Bonn and one of the authors: “A quite typical organizational change is that weather offices that used to be in cities were transferred to newly built airports needing observations and predictions. The weather station in Bonn used to be on a field in the village of Poppelsdorf, which is now a quarter of Bonn, and after several relocations the station is currently at the airport Cologne-Bonn.”

For global trends, the most important changes are technological changes of the same kinds and with similar effects all over the world. Now we are, for instance, in a period with widespread automation of the observational networks.

Appropriate computer programs for the automatic homogenization of climatic time series are the result of several years of development work. They work by comparing nearby stations with each other and looking for changes that only happen in one of them, as opposed to climatic changes that influence all stations.
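The idea of comparing neighbouring stations can be illustrated with a minimal Python sketch. It is not one of the methods tested in the study: the function names `detect_break` and `demo`, the noise levels, and the size and date of the jump are all assumptions chosen for illustration. The sketch subtracts a reference station from a candidate station, so that the shared climate signal cancels, and then looks for the split point where the mean of the difference series changes most.

```python
import random


def detect_break(candidate, reference, min_seg=5):
    """Locate the single most likely jump in candidate relative to reference.

    Returns (index, jump): the first index after the break and the
    estimated size of the jump in the difference series.
    """
    # The shared regional climate signal cancels in the difference series.
    diff = [c - r for c, r in zip(candidate, reference)]
    n = len(diff)
    best_index, best_score, best_jump = None, 0.0, 0.0
    for k in range(min_seg, n - min_seg + 1):
        mean_before = sum(diff[:k]) / k
        mean_after = sum(diff[k:]) / (n - k)
        jump = mean_after - mean_before
        # Weight by segment lengths so splits near the ends are not favoured.
        score = jump * jump * k * (n - k) / n
        if score > best_score:
            best_index, best_score, best_jump = k, score, jump
    return best_index, best_jump


def demo(seed=0):
    """Two synthetic stations sharing one climate signal; only one has a jump."""
    rng = random.Random(seed)
    n_years, break_year, jump = 100, 60, 0.8  # illustrative values
    regional = [0.01 * t + rng.gauss(0, 0.5) for t in range(n_years)]
    reference = [x + rng.gauss(0, 0.1) for x in regional]
    candidate = [
        x + rng.gauss(0, 0.1) + (jump if t >= break_year else 0.0)
        for t, x in enumerate(regional)
    ]
    return detect_break(candidate, reference)


if __name__ == "__main__":
    index, size = demo()
    print(f"break detected at year index {index}, estimated jump {size:+.2f}")
```

This toy version always reports its best candidate, even for a homogeneous series. Operational methods additionally test whether a break is statistically significant, handle several breaks per station, and compare each station with many neighbours rather than one.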

To scrutinize these homogenization methods, the research team created a dataset that closely mimics observed climate datasets, including the spurious changes mentioned above. In this way, the spurious changes are known and one can study how well they are removed by homogenization. Compared to previous studies, the testing datasets showed much more diversity; real station networks also show a lot of diversity due to differences in their management. The researchers took special care to produce networks with widely varying station densities; in a dense network it is easier to see a small spurious change in a station. The test dataset was larger than ever, containing 1900 station networks, which allowed the scientists to accurately determine the differences between the top automatic homogenization methods that have been developed by research groups from Europe and the Americas. Because of the large size of the testing dataset, only automatic homogenization methods could be tested.
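The benchmarking principle described above, inserting known spurious changes and then measuring how well they are removed, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `rmse`, `linear_trend` and `score`, the warming rate, the jump size, and the stand-in "homogenized" series are all hypothetical and are not taken from the study or from any of the tested methods.

```python
import math


def rmse(series, truth):
    """Root-mean-square error of a series against the known truth."""
    return math.sqrt(sum((s - t) ** 2 for s, t in zip(series, truth)) / len(truth))


def linear_trend(series):
    """Ordinary least-squares slope per time step."""
    n = len(series)
    t_mean = (n - 1) / 2
    y_mean = sum(series) / n
    num = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def score(series, truth):
    """Return (RMSE, absolute trend error) relative to the truth."""
    return rmse(series, truth), abs(linear_trend(series) - linear_trend(truth))


# Known "true" climate: a steady warming of 0.01 per year (illustrative value).
truth = [0.01 * t for t in range(100)]
# Inserted inhomogeneity: a 0.5 jump from year 50 on, e.g. a relocation.
raw = [x + (0.5 if t >= 50 else 0.0) for t, x in enumerate(truth)]
# Stand-in for a homogenization result that removed 0.4 of the 0.5 jump.
homogenized = [x - (0.4 if t >= 50 else 0.0) for t, x in enumerate(raw)]

raw_rmse, raw_trend_err = score(raw, truth)
hom_rmse, hom_trend_err = score(homogenized, truth)
```

Because the truth is known by construction, both the error of the station series and the error of its trend can be computed before and after homogenization. A real benchmark would repeat this over many stations and networks and would also score network-mean series.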

The international author group found that it is much more difficult to improve the network-mean climate signals than to improve the accuracy of station time series.

The Spanish homogenization methods excelled. The method developed at the Centre for Climate Change, Univ. Rovira i Virgili, Vila-seca, Spain, by Hungarian climatologist Dr. Peter Domonkos was found to be the best at homogenizing both individual station series and regional network mean series. The method of the State Meteorological Agency (AEMET), Unit of Islas Baleares, Palma, Spain, developed by Dr. José A. Guijarro was a close second.

When it comes to removing systematic trend errors from many networks, and especially from networks where similar spurious changes happen in many stations at similar dates, the homogenization method of the American National Oceanic and Atmospheric Administration (NOAA) performed best. This is a method that was designed to homogenize station datasets at the global scale, where the main concern is the reliable estimation of global trends.

The open screen formerly used at the Uccle station in Belgium, with two modern closed Stevenson screens with double-louvred walls in the background.

Quotes from participating researchers

Dr. Peter Domonkos, who earlier was a weather observer and is now writing a book about time series homogenization: “This study has shown the value of large testing datasets and demonstrates another reason why automatic homogenization methods are important: they can be tested much better, which aids their development.”

Prof. Dr. Manola Brunet, who is the director of the Centre for Climate Change, Univ. Rovira i Virgili, Vila-seca, Spain, Visiting Fellow at the Climatic Research Unit, University of East Anglia, Norwich, UK and Vice-President of the World Meteorological Services Technical Commission, said: “The study showed how important dense station networks are to make homogenization methods powerful and thus to compute accurate observed trends. Unfortunately, still a lot of climate data needs to be digitized to contribute to an even better homogenization and quality control.”

Dr. Javier Sigró from the Centre for Climate Change, Univ. Rovira i Virgili, Vila-seca, Spain: “Homogenization is often a first step that allows us to go into the archives and find out what happened to the observations that produced the spurious jumps. Better homogenization methods mean that we can do this in a much more targeted way.”

Dr. José A. Guijarro: “Not only the results of the project may help users to choose the method most suited to their needs; it also helped developers to improve their software showing their strengths and weaknesses, and will allow further improvements in the future.”

Dr. Victor Venema: “In a previous similar study we found that homogenization methods that were designed to handle difficult cases where a station has multiple spurious jumps were clearly better. Interestingly, this study did not find this. It may be that it is more a matter of methods being carefully fine-tuned and tested.”

Dr. Peter Domonkos: “The accuracy of homogenization methods will likely improve further, however, we never should forget that the spatially dense and high quality climate observations is the most important pillar of our knowledge about climate change and climate variability.”

Press releases

Spanish weather service, AEMET: Un equipo internacional de climatólogos estudia cómo minimizar errores en las tendencias climáticas observadas

URV university in Tarragona, Catalan: Un equip internacional de climatòlegs estudia com es poden minimitzar errades en les tendències climàtiques observades

URV university, Spanish: Un equipo internacional de climatólogos estudia cómo se pueden minimizar errores en las tendencias climáticas observadas

URV university, English: An international team of climatologists is studying how to minimise errors in observed climate trends

Articles

Tarragona 21: Climatòlegs de la URV estudien com es poden minimitzar errades en les tendències climàtiques observades

Genius Science, French: Une équipe de climatologues étudie comment minimiser les erreurs dans la tendance climatique observée

Phys.org: A team of climatologists is studying how to minimize errors in observed climate trend