The amazing part was the Q&A afterwards. I was already surprised to see that I was one of the youngest people there, but I had not anticipated that most of the audience would be engineers and economists, that is, climate ostriches. As far as I remember, not a single public question was interesting! All were trivial nonsense, I am sorry to have to write.

One of the ostriches showed me some graphs from a book by Fred Singer. Maybe I should go to an economics conference and cite some mercantilist theorems of Colbert. I wonder how they would respond.

Afterwards I wondered whether translating their "arguments" against climatology into economics would help non-climatologists see the weakness of these simplistic arguments. This post is a first attempt.

Seven translations

#1. That there is and always has been natural variability is not an argument against anthropogenic warming, just like the pork cycle does not preclude economic growth.

#2. One of our economist ostriches thought that there was no climate change in Germany because one mountain station shows cooling. That is about as stupid as claiming that there is no economic growth because one of your uncles had a decline in his salary.

#3. The claim that the temperature did not increase in the last century, or that it was even cooling, and that it is all a hoax of climatologists (read: the evil Phil Jones) can be compared to a claim that the world did not get wealthier in the last century and that all statistics showing otherwise are a government cover-up. In both cases, many independent lines of research show the increase.

#4. The idea that CO2 is not a greenhouse gas and that increases in CO2 cannot warm the atmosphere is comparable to people claiming that their car does not need energy and that they will not drive less if gasoline becomes more expensive. Okay, maybe this is not the best example: most readers will likely claim that gas prices have no influence on them, that they have no choice and have to drive. But I would hope that economists know better. The strength of both effects needs study, but to suggest that there is no effect is beyond reason.

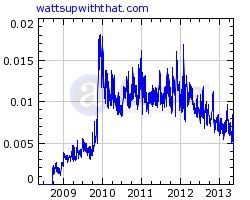

#5. Which climate change are you talking about, it stopped in 1998. That would be similar to the claim that since the banking crisis in 2008 markets are no longer efficient. Both arguments ignore the previous increases and deny the existence of variability.

#6. The science isn't settled. Both sciences have foundations that are broadly accepted in the profession (consensus) and problems that are not yet clear and are a topic of research.

#7. The curve fitting exercises without any physics by the ostriches are similar to "technical analyses" of stock ratings.

[UPDATE. Inspired by a comment of David in the comments of Judith Curry on Climate Change (EconTalk)

#8. The year 1998 was a strong El Niño year, way above trend and well above nearby years. Choosing that window is similar to saying that stocks are a horrible investment because the market collapsed during the Great Depression.]

[UPDATE. Found a nice one.

Daniel Barkalow writes:

Looking at the global average surface temperature (which is what those graphs tend to show), is a bit like looking at someone's bank account. It's a pretty good approximation of how much money they have, but there's going to be a lot of variability, based on not knowing what outstanding bills the person has, and the person is presumably earning income continuously, but only getting paychecks at particular times. This mostly averages out, but there's the risk in looking at any particular moment that it's a really uncharacteristic moment.

In particular, it seems to me that the "pause" idea is based on the fact that 1998 was warmer than nearly every year since, while neglecting that 1998 was warmer than 1997 or any previous year by more than 15 years of predicted warming. If this were someone's bank account, we'd guess that it reflected an event like having their home purchase fall through after selling their old home: some huge asset not usually included ended up in their bank account for a certain period before going back to wherever it was. You wouldn't then think the person had stopped saving, just because they hadn't saved up to a level that matches when their house money was in their bank account. You'd say that there was weird accounting in 1998, rather than an incredible gain followed by a mysterious loss.]
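The effect of starting a trend at an outlier year can be sketched with a toy calculation. The series below is purely synthetic, not real temperature data: the steady trend of 0.017 units per year and the one-off 1998 spike of 0.25 units (about 15 years of that trend) are assumptions chosen only to mirror the argument above, and `ols_slope` is a hypothetical helper written for this illustration.

```python
# Synthetic illustration only: these are NOT real temperature data.
# Assumed values: a steady trend of 0.017 units per year and a one-off
# spike of 0.25 units in 1998 (roughly 15 years of that assumed trend).

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den

TREND = 0.017   # assumed underlying increase per year
SPIKE = 0.25    # assumed one-off anomaly in 1998

years = list(range(1980, 2013))
series = [TREND * (y - 1980) + (SPIKE if y == 1998 else 0.0) for y in years]

# Trend fitted over the whole period.
full_slope = ols_slope(years, series)

# Trend fitted over a window that starts exactly on the spike year.
start = years.index(1998)
slope_from_1998 = ols_slope(years[start:], series[start:])

print(f"trend over 1980-2012: {full_slope:.4f} per year")
print(f"trend over 1998-2012: {slope_from_1998:.4f} per year")
```

In this toy series the underlying trend never changes, yet the fit that starts on the spike year yields a clearly smaller slope than the fit over the full period. That is the bank account with the house money in it.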

One interesting question

The engineers and economists were wearing suits and the scientists were dressed more casually, so it was easy to find each other. One had an interesting challenge, which was at least new to me. He argued that the Fahrenheit scale, which was used a lot in the past, is uncertain because it depends on the melting point of brine, and the amount of salt put in the brine will vary.

One would have to make quite an error with the brine to get rid of global warming, however. Furthermore, everyone would have had to make the same error, because random errors would average out. And if there were a bias, it would be reduced by homogenization. Finally, almost all of the anthropogenic warming occurred after the 1950s, when this problem no longer existed.
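The point that random errors average out, while only a shared error survives averaging, can be illustrated with a small simulation. This is a minimal sketch under made-up assumptions: the number of stations, the spread of the calibration errors and the size of the shared bias are all hypothetical and in arbitrary units, not estimates of real thermometer errors.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is reproducible

N_STATIONS = 10_000   # hypothetical number of thermometers
ERROR_SD = 0.5        # assumed spread of independent calibration errors
SHARED_BIAS = 0.5     # assumed offset if everyone made the same mistake

# Case 1: every station makes its own, independent calibration error.
independent_errors = [random.gauss(0.0, ERROR_SD) for _ in range(N_STATIONS)]
mean_independent = statistics.fmean(independent_errors)

# Case 2: every station makes the same error on top of its own random one.
shared_errors = [SHARED_BIAS + e for e in independent_errors]
mean_shared = statistics.fmean(shared_errors)

print(f"mean error, independent mistakes: {mean_independent:+.3f}")
print(f"mean error, shared mistake:       {mean_shared:+.3f}")
```

With independent errors the network average ends up close to zero, even though each individual thermometer can be off by a lot. Only when all stations share the same offset does the average inherit it, which is why the brine argument would need everyone to have made the same mistake.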

A related problem is that the definition of the Fahrenheit scale has changed. In addition, there are many temperature scales, and in old documents it is not always clear which unit was used. Wikipedia lists these scales: Celsius, Delisle, Fahrenheit, Kelvin, Newton, Rankine, Réaumur and Rømer. Such questions matter for getting the last decimal right, but they are no reason to become an ostrich.

Disturbing

I find it a bit disturbing that so many economists come up with such simple counter-"arguments". They basically assume that climatologists are stupid or are conspiring against humanity. Expecting that of another field of study makes one wonder where they got that expectation from, and it casts a bad light on economics.

This was just a quick post; I would welcome ideas for improvements and additions in the comments. Did I miss any interesting analogies?